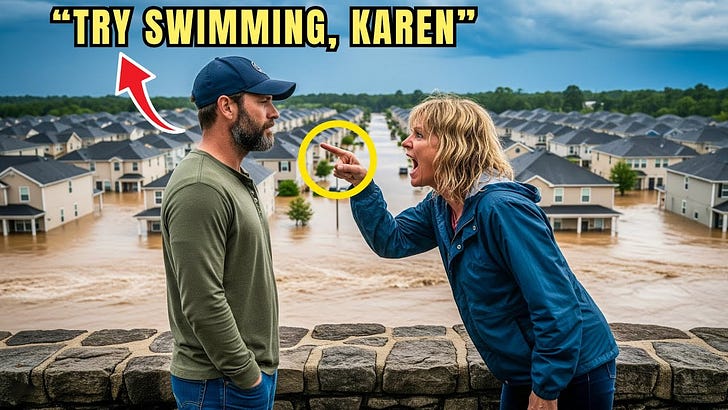

This Entire Youtube Story Is An AI Fake - Made To Increase Views And Ad Revenue - Another Example Of "Enshittification" ...

The people, the places: none of it exists, and none of the events it reports ever happened. Youtube is full of this crap, and it's getting more and more prevalent: the use of AI to generate fake news...

Here’s the video. The subtitles and the voiceover are pretty obviously bad AI slop, and so are the images…

And Youtube will not take it down, yet it extensively censors real news. It’s the enshittification of yet another Internet platform:

Cory Doctorow

8 Feb 2024

Last year, I coined the term “enshittification” to describe the way that platforms decay. That obscene little word did big numbers; it really hit the zeitgeist.

The American Dialect Society made it its Word of the Year for 2023 (which, I suppose, means that now I’m definitely getting a poop emoji on my tombstone).

So what’s enshittification and why did it catch fire? It’s my theory explaining how the internet was colonised by platforms, why all those platforms are degrading so quickly and thoroughly, why it matters and what we can do about it. We’re all living through a great enshittening, in which the services that matter to us, that we rely on, are turning into giant piles of shit. It’s frustrating. It’s demoralising. It’s even terrifying.

I think that the enshittification framework goes a long way to explaining it, moving us out of the mysterious realm of the “great forces of history”, and into the material world of specific decisions made by real people; decisions we can reverse and people whose names and pitchfork sizes we can learn.

Enshittification names the problem and proposes a solution. It’s not just a way to say “things are getting worse”, though, of course, it’s fine with me if you want to use it that way. (It’s an English word. We don’t have ein Rat für englische Rechtschreibung [a council for English spelling]. English is a free-for-all. Go nuts, meine Kerle [my guys].) But in case you want to be more precise, let’s examine how enshittification works. It’s a three-stage process: first, platforms are good to their users. Then they abuse their users to make things better for their business customers. Finally, they abuse those business customers to claw back all the value for themselves. Then, there is a fourth stage: they die.

Let’s do a case study. What could be better than Facebook?

Facebook arose from a website developed to rate the fuckability of Harvard undergrads, and it only got worse after that. When Facebook started off, it was only open to US college and high-school kids with .edu and k12.us addresses. But in 2006, it opened up to the general public. It effectively told them: Yes, I know you’re all using MySpace. But MySpace is owned by a billionaire who spies on you with every hour that God sends. Sign up with Facebook and we will never spy on you. Come and tell us who matters to you in this world.

That was stage one. Facebook had a surplus — its investors’ cash — and it allocated that surplus to its end users. Those end users proceeded to lock themselves into Facebook. Facebook, like most tech businesses, had network effects on its side. A product or service enjoys network effects when it improves as more people sign up to use it. You joined Facebook because your friends were there, and then others signed up because you were there.

But Facebook didn’t just have high network effects, it had high switching costs. Switching costs are everything you have to give up when you leave a product or service. In Facebook’s case, it was all the friends there that you followed and who followed you. In theory, you could have all just left for somewhere else; in practice, you were hamstrung by the collective action problem.

It’s hard to get lots of people to do the same thing at the same time. So Facebook’s end users engaged in a mutual hostage-taking that kept them glued to the platform. Then Facebook exploited that hostage situation, withdrawing the surplus from end users and allocating it to two groups of business customers: advertisers and publishers.

To the advertisers, Facebook said: Remember when we told those rubes we wouldn’t spy on them? Well, we do. And we will sell you access to that data in the form of fine-grained ad-targeting. Your ads are dirt cheap to serve, and we’ll spare no expense to make sure that when you pay for an ad, a real human sees it.

To the publishers, Facebook said: Remember when we told those rubes we would only show them the things they asked to see? Ha! Upload short excerpts from your website, append a link and we will cram it into the eyeballs of users who never asked to see it. We are offering you a free traffic funnel that will drive millions of users to your website to monetise as you please. And so advertisers and publishers became stuck to the platform, too.

Users, advertisers, publishers — everyone was locked in. Which meant it was time for the third stage of enshittification: withdrawing surplus from everyone and handing it to Facebook’s shareholders.

For the users, that meant dialling down the share of content from accounts you followed to a homeopathic dose, and filling the resulting void with ads and pay-to-boost content from publishers. For advertisers, that meant jacking up prices and drawing down anti-fraud enforcement, so advertisers paid much more for ads that were far less likely to be seen. For publishers, this meant algorithmically suppressing the reach of their posts unless they included an ever-larger share of their articles in the excerpt. And then Facebook started to punish publishers for including a link back to their own sites, so they were corralled into posting full text feeds with no links, meaning they became commodity suppliers to Facebook, entirely dependent on the company both for reach and for monetisation.

When any of these groups squawked, Facebook just repeated the lesson that every tech executive learnt in the Darth Vader MBA:

“I have altered the deal. Pray I don’t alter it any further.”

Facebook now enters the most dangerous phase of enshittification. It wants to withdraw all available surplus and leave just enough residual value in the service to keep end users stuck to each other, and business customers stuck to end users, without leaving anything extra on the table, so that every extractable penny is drawn out and returned to its shareholders. (This continued last week, when the company announced a quarterly dividend of 50 cents per share and that it would increase share buybacks by $50bn. The stock jumped.)

But that’s a very brittle equilibrium, because the difference between “I hate this service, but I can’t bring myself to quit,” and “Jesus Christ, why did I wait so long to quit?” is razor-thin.

All it takes is one Cambridge Analytica scandal, one whistleblower, one livestreamed mass-shooting, and users bolt for the exits, and then Facebook discovers that network effects are a double-edged sword. If users can’t leave because everyone else is staying, when everyone starts to leave, there’s no reason not to go. That’s terminal enshittification.

This phase is usually accompanied by panic, which tech euphemistically calls “pivoting”. Which is how we get pivots such as: In the future, all internet users will be transformed into legless, sexless, low-polygon, heavily surveilled cartoon characters in a virtual world called the “metaverse”.

That’s the procession of enshittification. But that doesn’t tell you why everything is enshittifying right now and, without those details, we can’t know what to do about it. What is it about this moment that led to the Great Enshittening? Was it the end of the zero-interest rate policy (ZIRP)? Was it a change in leadership at the tech giants?

Is Mercury in retrograde?

And now it’s Youtube’s turn. It has been going down this road for a long time, ever since it started “demonetizing” people. Youtube sells advertising that is supposed to accompany each video, and videos that get a certain level of views are “monetized”: Youtube agrees to share a percentage of the ad revenue with the creators. But from time to time Youtube “demonetizes” creators. It still runs the ads, but now it collects all of the revenue.

It’s a form of fraud, really. People get suckered onto the platform, and then, when the ad revenue gets big enough, voilà, Youtube takes all of it. They of course cite … reasons … which are pretty sketchy, but all of the enshittified platforms are sketchy operations. The creator ends up having to rely on sponsorships or crowdfunding, because making videos is expensive and takes a lot of time and work.

So creators are defrauded of money and end up working for free for Youtube and the other platforms, like Facebook, that are entirely dependent on user-supplied content. It’s a billion-dollar scam, and it works; boy, does it ever work. Sure, creators could walk away, but they would lose all contact with their subscribers, because the subscriber list is controlled by the platform. Youtube, Facebook, it’s all the same deal.

And these platforms have successfully avoided antitrust enforcement, even though they routinely buy out competitors or run them out of business. Billions of dollars buys a lot of political clout, and those billions come from fraud and abuse. I haven’t been on Facebook for over three years, even though I get weekly emails from them trying to entice me back (“see what XXXX is saying about you”, “XXXX tagged you in a post” and the like). No deal.

This isn’t what the Internet was intended to be …

There’s a common pattern to all of this AI junk, and you can spot it pretty quickly once you’ve figured it out:

Highly emotional content

Images that are unlikely in real life

Few, if any, traceable details: there will be a constellation of similar facts available, but no direct confirmation of the facts asserted.

Subtitles and a voice-over that match each other exactly, right down to the mistakes: in the first video, for example, “1940’s” is spoken as “one-ninety-four zero apostrophe ess”.

And so forth and so on.